VBS Scheduler with zero overhead

We introduce and use variable-bandwidth servers (VBS) for scheduling and executing processes under programmable temporal isolation. A VBS is an extension of a constant-bandwidth server where throughput and latency of process execution can not only be controlled to remain constant across different competing workloads but also to vary in time as long as the resulting bandwidth stays below a given bandwidth cap. Switching to different periods allows to trade off scheduling overhead and temporal isolation at runtime. Smaller periods and thus higher sampling frequencies better isolate servers because their response times for executing a given piece of code are better maintained across larger sets of server workloads, at the expense of higher administrative overhead through more scheduler invocations.

We have designed and implemented a VBS-based EDF-style constant-time scheduling algorithm, a constant-time admission test, and four alternative queue management plugins which

influence the scheduling algorithm’s overall temporal and spatial complexity. The schedulability test and scheduling algorithm for workload-oriented programming have a focus on reducing runtime overhead and improving predictability by trading off schedulability precision (test) as well as system utilization (algorithm) for less time complexity of managing process admission and more predictable administrative overhead of making scheduling decisions, respectively. In other words, the schedulability test may reject some schedulable processes but may be performed fast (in constant time with a fixed number of resources) and the scheduling algorithm may interrupt the system more frequently but does so predictably often and makes scheduling decisions fast (in constant time with a fixed timeline but for any number of processes).

Implementation

We have implemented our scheduling algorithm and benchmarked it on synthetic workloads. The scheduling algorithm essentially manages a deadline-ordered set of released processes (ready queue) and a release-time-ordered set of delayed processes (blocked queue). Currently our implementation supports doubly-linked lists, time-slot arrays of FIFO queues, and a time-slot matrix of FIFO queues, and an alternative FIFO matrix representation using B+ trees that reduce memory usage.

The algorithm's time complexity is dominated by the queue operations' time complexities since the algorithm itself is loop-free and therefore runs in constant time.

Results

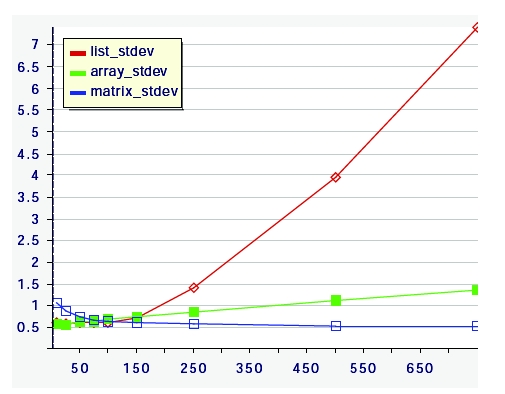

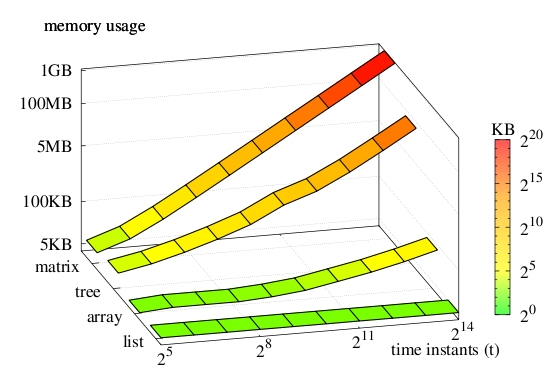

Figure 1 shows the standard deviation of the execution time of the scheduler with different number of processes and Figure 2 shows the memory usage for the implemented plugins in terms of time instants.

Figure 1: Standard deviation |

Figure 2: Memory usage |

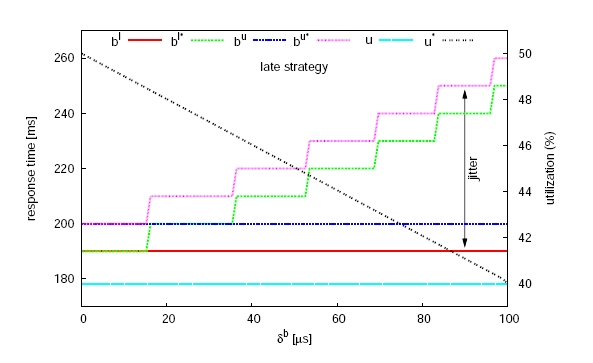

VBS Scheduler with non-zero overhead

An important aspect of the scheduler is the scheduling overhead which has to be accounted for in order to guarantee the temporal properties of the system. We propose two complementary methods to account for scheduler overhead in the schedulability analysis of Variable Bandwidth Servers (VBS). We first determine an upper bound on the number of scheduler invocations that may occur during a given amount of time. This is possible because process release and suspend times are known in VBS. Given the number of preemptions in a period of a VBS process, we show that there are two complementary methods to account for scheduler overhead, either by decreasing the speed at which processes run to maintain CPU utilization (called response accounting), or by increasing CPU utilization to maintain the speed at which processes run (called utilization accounting). Response accounting decreases the net server bandwidth available to a process by dedicating some of its bandwidth to the scheduler. Utilization accounting increases the server bandwidth to maintain the net bandwidth available to a process. In other words, with utilization accounting, the bounds on response times are maintained while CPU utilization is increased whereas, with response accounting, the upper bounds on response times and thus on jitter are increased while utilization is maintained. Both methods can be combined by handling an arbitrary fraction of the total scheduler overhead with one method and the rest with the other. We also show, by example, that the fraction may be chosen within server- and process-dependent intervals such that CPU utilization decreases while the response-time bounds and thus the speed at which processes run remain the same, as can be seen in Figure 3.

Figure 3: Response time and utilization when the fraction of response accounted overhead varies in [0, 100] using the late strategy

Contact: Silviu Craciunas

Download

- 29 Jul 2008: tiptoe-scheduler-0.2.tar.gz, README (also included in the tarball)